If you haven’t read them already, here are part 1 and part 2 of my HP posts. Those 2 posts focused more on the marketing aspect of HP and networking. My intent in this post is to discuss the technical approach that HP is taking with regard to networking, with a main focus on the data center. This post and the other 2 came into being due to my direct involvement with Gestalt IT’s Tech Field Day 5 out in San Jose, California.

When it comes to data centers, HP is focusing on 4 things. They are:

1. Server virtualization

2. Managing and provisioning the virtual edge

3. Converged network infrastructure

4. Environmental

Make no mistake, HP is pushing hard in the data center to be the number 1 networking vendor. They are going to compete with Cisco and other vendors in categories other than price. They have some technology that they are fairly proud of. The bulk of that technology appears to come from the 3Com purchase, and as they roll out more of this technology, you see what a good acquisition that was for HP. There is, without a doubt, more technology to come from HP. The question is, can they compete once you get beyond the “we’re not Cisco” pitch?

I will say that HP wants to interoperate with just about anyone they can. They are pushing a standards-based philosophy with all of their products. There are instances of proprietary or semi-proprietary functions within the HP solutions, but not an excessive amount. You can be pretty certain that HP will be able to talk to your hardware no matter the vendor. As an added bonus, HP pretty much gives you every feature available on their switches and routers. They don’t nickel and dime you with feature sets or upgrades. The only licensing uptick would be for IMC (management).

With that, let’s take a look at their data center focus:

1. Server virtualization – HP notes that server virtualization is really about maximizing the use of your hardware, which mainly means CPU utilization. However, they point out that the “killer app” when it comes to virtualization is NOT getting more CPU utilization. It is actually “vMotion”, or the ability to move server instances (VM guests) from one physical box to another in minimal time. While vMotion is a great feature, it introduces a few changes in how we consider data center design.

*** Note: I am only referencing VMware when it comes to virtualization. I realize other vendors like Microsoft and Citrix have their own virtualization products, but the bulk of what I am hearing from vendors like HP is centered around VMware.

A. Traffic patterns are shifting from “north-south” to “east-west” due to virtualization. For a more detailed explanation of this, see Greg Ferro’s post on data center traffic patterns.

B. The ever-increasing number of VM guests present on an ESX/ESXi host causes an increase in the amount of traffic flowing out of a physical server or blade enclosure onto the network. As a result, the bandwidth requirements per physical box or port are higher than they were in the past, when a single OS resided on a physical server.

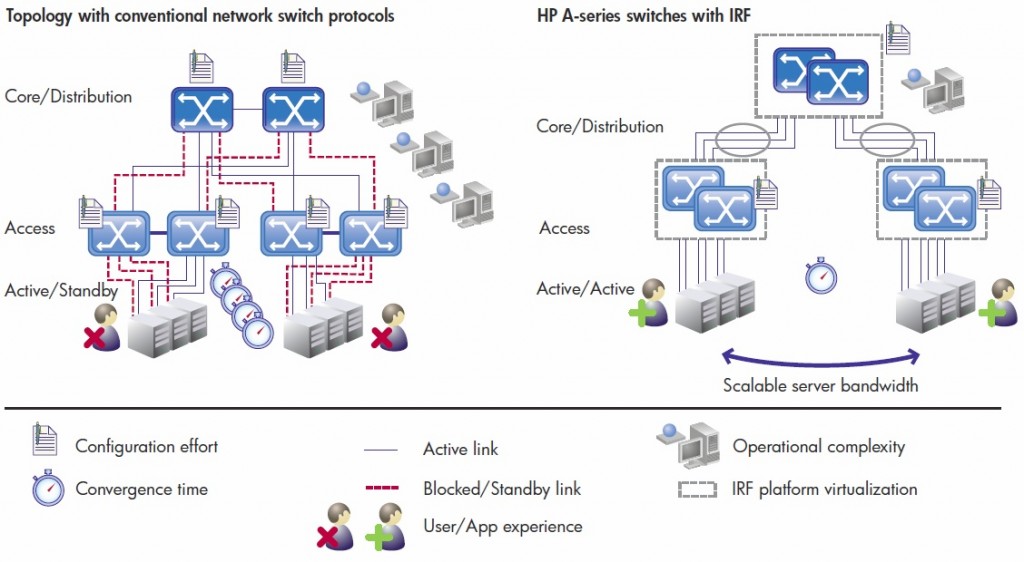
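To put rough numbers on point B above, here is a back-of-the-envelope sketch in Python. The guest count and per-guest traffic figures are made-up assumptions for illustration, not numbers from HP, and the function name is hypothetical.

```python
import math

def uplinks_needed(guests: int, avg_mbps_per_guest: float, link_mbps: int = 1000) -> int:
    """Return how many uplinks of link_mbps are needed to carry the aggregate guest traffic."""
    total_mbps = guests * avg_mbps_per_guest
    return math.ceil(total_mbps / link_mbps)

# Hypothetical figures: a single OS on one box versus 20 guests on one host,
# each averaging 150 Mbit/s, with 1 Gbit/s uplinks.
single_os = uplinks_needed(guests=1, avg_mbps_per_guest=150)
virtualized = uplinks_needed(guests=20, avg_mbps_per_guest=150)
print(single_os, virtualized)
```

Under those assumptions, the same physical box goes from fitting comfortably on one gigabit link to needing several, which is the per-port bandwidth pressure described above.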

In order to handle the increase in east-west traffic and get away from the traditional 3 tier switch model (Core, Distribution, Access), HP has developed their own layer 2 multipath technology called IRF (Intelligent Resilient Framework). You can read an in-depth whitepaper on IRF here.

IRF is really just an advanced stacking technology: it turns a pair of switches into a single logical switch. All A series switches from HP are IRF capable. If you are thinking that sounds a lot like Cisco’s VSS technology, you are correct. In fact, Ivan Pepelnjak wrote a post on IRF back in January of this year that compares IRF with VSS, vPC, and Juniper’s XRE200.

A couple of interesting things about IRF are:

A. You can run TRILL and SPB/PBB on top of it. Or I should say, you WILL be able to run TRILL and SPB/PBB when they are finalized.

B. IRF can connect switches up to 100km apart. Although I am not sure what the usable application of that is, the capability is still there.

C. IRF failover can occur in 2ms or less.

D. You can have up to 24 IRF links between a pair of A12500 devices.

E. Multi-active detection (MAD) – This is used if connectivity between the 2 IRF switches is lost. 3 methods can be used to prevent a dual master scenario, where each IRF switch (master and slave) thinks it is the only live switch and both assume the master role:

1) LACP – HP uses extensions within the LACP standard. They extend the protocol with a heartbeat, but it only works with HP hardware. If this heartbeat between the two switches is lost, the slave shuts off its ports and waits until the link is restored.

2) BFD – Bidirectional Forwarding Detection. Using either an out of band link between the 2 IRF switches or a standards-based LACP link to a device other than the neighboring IRF switch, the HP switch can determine if the other IRF device is still online.

3) ARP – Using gratuitous ARP, the HP switch can interoperate with another switch that may only understand spanning tree and determine if the other IRF switch is still online.
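The split-brain handling described above can be sketched as a small decision function. This is only my own illustration in Python of the behavior as HP explained it, not HP’s implementation: the class, the function, and the state names (“normal”, “active”, “recovery”) are all hypothetical, and the “peer alive” input stands in for whichever MAD method (LACP, BFD, or ARP) is in use.

```python
from dataclasses import dataclass
from enum import Enum

class Role(Enum):
    MASTER = "master"
    SLAVE = "slave"

@dataclass
class IrfMember:
    name: str
    role: Role
    ports_enabled: bool = True

def react(member: IrfMember, irf_link_up: bool, peer_alive_via_mad: bool) -> str:
    """Decide what one IRF member does, given the IRF link state and the MAD result."""
    if irf_link_up:
        # The pair is intact and still behaves as one logical switch.
        return "normal"
    if peer_alive_via_mad:
        # IRF link is down but the peer is still running: a potential dual master.
        if member.role is Role.SLAVE:
            # The slave shuts off its ports and waits for the link to return.
            member.ports_enabled = False
            return "recovery"
        return "active"
    # No sign of the peer at all: assume it failed and carry on as master.
    member.role = Role.MASTER
    member.ports_enabled = True
    return "active"

master = IrfMember("sw1", Role.MASTER)
slave = IrfMember("sw2", Role.SLAVE)
print(react(master, irf_link_up=False, peer_alive_via_mad=True))
print(react(slave, irf_link_up=False, peer_alive_via_mad=True))
```

The point of the sketch is the middle branch: without some way to see the peer outside of the IRF link, both switches would fall through to the last branch and each would promote itself to master.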

2. Managing and provisioning the virtual edge – HP is trying to reduce the complexities involved in standing up additional VM guests as well as managing them. They want to cut the total time it takes to provision a new guest by removing some of the silos involved in the process. This is similar to how Virtual Connect is designed to put more networking flexibility in the hands of the server/blade enclosure administrator. They also want to make it easier to move a VM guest from one host to another.

Consider the following items that are a part of moving a VM guest from one host to another:

A. Virtual machine

B. Virtual NICs

C. Virtual LANs

D. VMware Port Groups

E. vSwitches

F. Physical NICs

G. Physical Switches

You also have to look at what tools you use to manage all the various pieces of a physical and virtual environment. HP offers up IMC (Intelligent Management Center) to solve that problem. IMC is more than just “red light/green light”, to use the words of HP. It is configuration management, a RADIUS server, NAC (network access control), wireless, and other types of management all rolled up into one product.

2 additional things to note:

1. sFlow is built in to every one of HP’s networking products.

2. HP is going to embrace standards-based approaches to negate the Cisco Nexus 1000V but won’t comment on how they are going to do that, or what they will use. In other words, they are working on it.

3. Converged network infrastructure – It is a very real possibility that the days of having a separate Fibre Channel network for storage are numbered. Vendors like Cisco, Brocade, and HP are supporting Fibre Channel over Ethernet (FCoE) now. Voice traffic was transitioned over to the Ethernet side several years ago and is now widely deployed around the world. You see far fewer separate voice networks out there these days. HP is guessing the same will be true for storage in the coming years. Some things to consider from HP:

A. They will combine storage with Ethernet (FCoE) utilizing DCB standards.

B. As of February, when I attended Tech Field Day 5, HP didn’t believe you could do more than 1 hop using FCoE, because the required DCB protocols weren’t available yet.

C. HP gives 2 options for FCoE today: either via the A5820 TOR (top of rack) switch or via the VC (Virtual Connect) FlexFabric modules using CNAs (converged network adapters).

D. HP thinks true FCoE multi-hop will come around 2013. I’m sure companies like Cisco would disagree.

The term that HP is using for this converged storage/voice/data network is CEE, which stands for Converged Enhanced Ethernet. This was one of the terms used in the early days of DCB. DCE, or Data Center Ethernet, was the other term used. HP would like to see the term CEE used across the industry in the same way that the Wi-Fi Alliance logo is found on all Wi-Fi CERTIFIED hardware.

4. Environmental – This is one area where I see a lot of promise from HP. It’s nice to see the enhancements to the 1’s and 0’s, but it is equally important to ensure that equipment is designed and monitored in an efficient manner. Some of the more interesting things HP is doing are:

A. Lower power and cooling requirements in servers, routers, and switches.

B. Building extra space in the top and bottom of devices to allow for better cooling. I guess you sacrifice an RU here and there with the tradeoff being less cooling required.

C. Building a “Sea-of-Sensors” by incorporating technology into all of their systems and racks to manage heat and power distribution throughout a data center. The main benefit is that HP can actually generate heat maps within the DC to determine the hottest spots. The future application of this is that the network will be able to dynamically move resources around to get a more balanced heat level across the entire DC. Check out this demo that just focuses on heat within a single server.
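To make the heat map idea a little more concrete, here is a toy sketch in Python of how sensor readings could be turned into a “move load from here to there” suggestion. The rack names and temperatures are invented for illustration, and none of this reflects how HP’s Sea-of-Sensors actually collects or acts on data.

```python
from statistics import mean

# Hypothetical inlet temperatures in Celsius, keyed by rack name.
readings = {
    "rack-a1": [24.0, 25.5, 26.0],
    "rack-a2": [31.0, 33.5, 32.0],
    "rack-b1": [22.0, 21.5, 23.0],
}

def rack_averages(samples):
    """Collapse each rack's sensor samples into one average temperature."""
    return {rack: mean(temps) for rack, temps in samples.items()}

def hottest_and_coolest(samples):
    """Return the names of the racks with the highest and lowest average temperature."""
    averages = rack_averages(samples)
    hottest = max(averages, key=averages.get)
    coolest = min(averages, key=averages.get)
    return hottest, coolest

hot, cool = hottest_and_coolest(readings)
print(f"Candidate move: shift load from {hot} toward {cool}")
```

A real system would obviously weigh far more than an average temperature (power draw, available capacity, where the VM guests are allowed to run), but the basic loop of measure, rank, and rebalance is the idea.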

Closing Thoughts

The more I look at HP, the more I think they might be able to make a huge dent in the network market. They already sell a lot of hardware and software in the network space, but I see them selling even more in the coming years. They view Cisco as their main competitor, and as long as they focus on giving customers what Cisco cannot, they should be successful. While Cisco gets hammered on price, they also don’t get credit for producing network gear that usually has a ton of capabilities. The HP hardware will need somewhat similar features in order to stay competitive in the long run. Of all the networking companies out there, HP probably has the most resources and can compete more easily than the others.